Raise your hand if you’ve ever come across a test case failing due to a recent year change. Sounds familiar? Well, here’s my story about a bug that waited for five years to cause my test suite to fail for the first time – and would wait for another eleven to do it again. Intrigued? Read on.

Let’s give you some context

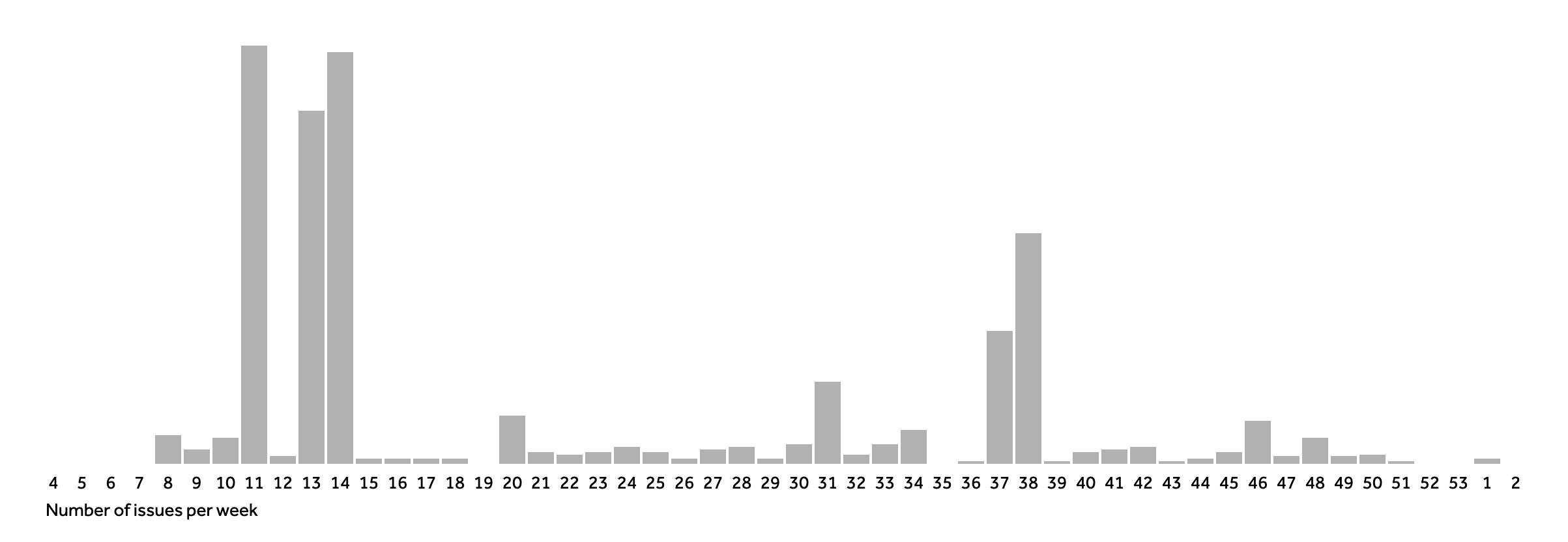

Some time ago I was working on a pretty complex issue tracking application used in many places across the world. One of its smaller features was an admin panel containing a bar graph with information how many issues were created throughout the year.

It was a standard Rails 5 application with a PostgreSQL 10 database. To store the issues – as you can probably guess – we had an issues table with a created_at column being a timestamp without time zone – a default in Rails.

In order to present that aggregated bar graph data, the application API provided a weekly_progress endpoint returning an array with each week’s statistic formatted as ["year - week number", number of issues], e.g.

[["2021-1", 13], ["2021-2", 4], ["2021-3", 1]]

Shall we see the code?

Let’s do it together. This gist presents a simplified excerpt of the code used in production. Please note I will not be focusing on refactoring this code in general, just taking a closer look on the date parts. There’s no need to analyse and understand it all at this very moment – just take a brief look and then join me in my journey to figure out what happened.

Once upon a December

Going back to work in January, right after my winter holidays in 2019 (yes, it’s been two years since I wanted to write this blog post…) I noticed the application test suite failing on our CI tool for every single branch and every single job for at least two days running. After taking a closer look, the error message was wishing me a happy new year! 🎉

Failures:

1) Stats#weekly_progress returns correct weekly stats

Failure/Error: expect(formatted_date).to eql([created_at.strftime("%Y"), created_at.strftime("%V").to_i].join("-"))

expected: "2019-1"

got: "2018-1"

(compared using eql?)

# ./spec/services/stats_spec.rb:25:in `block (3 levels) in <main>'

Finished in 4.15 seconds (files took 0.94939 seconds to load)

1 example, 1 failure

Typical… let’s see what the test contents look like:

describe '#weekly_progress' do

subject { Stats.weekly_progress(issue_ids) }

let(:issue) do

create(:issue).tap do |_issue|

# Make spec pass on a Sunday evening.

_issue.update_column :created_at,

(_issue.created_at.beginning_of_week + 1.day)

end

end

let(:issue_ids) { [issue.id] }

let(:stats) { subject[0] }

let(:formatted_date) { stats.first }

let(:issue_count) { stats.second }

let(:created_at) { issue.created_at }

it 'returns correct weekly stats' do

expect(subject.length).to eq(1)

expect(issue_count).to eql(1)

expect(formatted_date).to eql([created_at.strftime("%Y"), created_at.strftime("%V").to_i].join("-"))

end

end

We are creating an issue, running the Stats service and comparing the results with a formatted string. Sounds very standard, doesn’t it? Except for that one little comment we all noticed. Running git blame, I learned it was written on a December evening in 2014, when one busy programmer was working overtime on a Sunday…

# Make spec pass on a Sunday evening.

OK, so what I know so far is that the test is failing in two cases:

- With the fix, in the first week of 2019

- Without the fix, on a Sunday evening

I think we can all agree that the solution to the second problem was not the best. But what exactly does it do?

create(:issue).tap do |_issue|

# Make spec pass on a Sunday evening.

_issue.update_column :created_at,

(_issue.created_at.beginning_of_week + 1.day)

end

No matter when the issue was created, its created_at is updated and moved to Tuesday midnight of the same week. Interesting… Did I mention that 2019 happened to start on a Tuesday? And that it was the first time in five years that this test failed? And, coincidentally, that no year between 2014 and 2019 started on a Tuesday…?

Coincidence?

Let’s see how the year and week numbers are retrieved both in the test and in the Stats service. The test case uses the Issue model’s attribute and Ruby’s Date#strftime method, getting the year with %Y and the week number with %V.

[created_at.strftime("%Y"), created_at.strftime("%V").to_i]

The implementation, on the other hand, uses SQL to achieve the same:

<<-SQL

date_part('week', created_at) as week,

date_part('year', created_at) as year,

count(*) as total

SQL

Shouldn’t they both give me the same results? Well, as with everything in the programming world – it depends. The database keeps created_at in UTC, hence the date parts fetched with SQL will be in UTC as well. Conversly, Rails use the time zone set in their config and will convert all the dates to that time zone. If that will be UTC, we will notice no problems. So what was the time zone set in my test environment’s config?

config.time_zone = "Tokyo"

Bingo!

こんにちは

Japan Standard Time is 9 hours ahead of UTC. On Sunday evening in Europe it is already Monday in Japan. This means that a new week has started in Tokyo time, but it’s still the previous week in UTC – which explains the initial test failure being covered by always moving the issue’s created_at to Tuesday. Sneaky little hacking!

Now, what will happen if a Tuesday falls on January 1st, as in 2019? Well, all days in the first week of the year after that Tuesday will be taken back in time to the exact moment the year changed in Japan. For Rails that would be Tue, 01 Jan 2019 00:00:00 JST +09:00, while for PostgreSQL it’s Mon, 31 Dec 2018 15:00:00 UTC +00:00.

The real fix

Or rather – shall I fix it? Let’s check when will be the next time this test fails. At the moment of writing this blog post, the next year starting with Tuesday is 2030. Nice, no need to rush. But seriously, is it the test or the code that’s faulty?

My initial approach was to fix the test, by retrieving the date in UTC – that’s how the code works in the end, right? Is the week number at a given moment the same all over the world though? It relies on the date and the date changes along with time zones, so the answer is NO, we need to fetch the SQL data with regards to time zones.

Think twice

Checking how to express PostgreSQL timestamps in a specified time zone, I got to the AT A TIME ZONE function. I tried using it on my timestamps as:

<<-SQL

date_part('week', timezone(#{zone}, created_at)) as week,

date_part('year', timezone(#{zone}, created_at)) as year,

count(*) as total

SQL

It did not go exactly as I expected – PostgreSQL treated my timestamps as if they already were in the zone I specified and then returned them in my local time zone. OK, so how should I fix this properly?

Not gonna lie, I checked StackOverflow. This answer gives a very detailed explanation to the problem, focusing on the differences between timestamp with time zone and timestamp without time zone. As already mentioned, by default, Rails will use the second one for timestamps – hence our created_at is of that type as well.

As suggested, in order to get the date expressed in a specified time zone, I need to first cast my timestamp to one with time zone. I can do that either by using the :: operator – created_at::timestamptz – or by running AT A TIME ZONE once. After that I need another AT A TIME ZONE to do the actual time conversion.

<<-SQL

date_part('week', timezone(#{zone}, created_at::timestamptz)) as week,

date_part('year', timezone(#{zone}, created_at::timestamptz)) as year,

count(*) as total

SQL

Will it work?

Let’s run the test on Sunday evening.

.

Finished in 0.074 seconds (files took 0.99628 seconds to load)

1 example, 0 failures

Success, we are getting the correct week there. I can safely remove the initial fix hack and everything should work like a charm. Why is there a second part to this story then? If you wanna know what 2020 have brought to the party, be sure to check it out!