Two months ago, a headline made the rounds on the news aggregators: “Scaleway slashes IaaS prices by 70%”.

For a mere $3 per month, you could now rent an ARM server in the cloud, complete with 50 GB of storage. Not just a VPS, mind you, but a physical dedicated PCB the size of a Raspberry Pi with a quad-core ARMv7 CPU and 2 gigs of RAM. With loads upon loads of such servers mounted in custom-made racks.

Neat. But does the Scaleway C1 actually perform well? Is it a viable option for hosting small Rails apps? How does it fare against other cheap cloud server providers, such as DigitalOcean’s lowest-grade x86 server at $5/mo? ARM-based processors aren’t exactly heralds of performance, but does having four physical cores offset the lower per-core throughput? We promptly fired up instances of both machines and embarked on a quest to find out.

The contenders

Everyone loves side-by-side comparison tables, so let’s start with one.

| | Scaleway C1 | DigitalOcean 512MB |
|---|---|---|
| CPU | Marvell PJ4Bv7 Revision 2 (ARMv7), 4 cores @ 1.33 GHz | Intel Xeon E5-2630L, 1 core @ 2.40 GHz |
| Memory | 2 GB | 512 MB |
| Disk space | 50 GB LSSD | 20 GB SSD |
| Transfer | Unlimited | 1 TB |

One thing that merits attention in this comparison is the “LSSD” storage type Scaleway boasts. LSSD stands for “Local Solid State Drive”. Despite being dubbed “local”, these drives are not directly attached to your machine; instead, they are mounted over the Network Block Device (NBD) protocol using dedicated wiring.

Performance tests

We performed a number of benchmarks from the Phoronix Test Suite on both machines, testing both CPU-bound and I/O-bound code. I’m not going to reproduce all results here; rather, I’ll focus on selected benchmark results that highlight the patterns underneath. For those interested, the full output from Phoronix can be downloaded here.

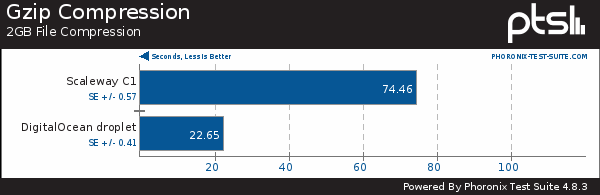

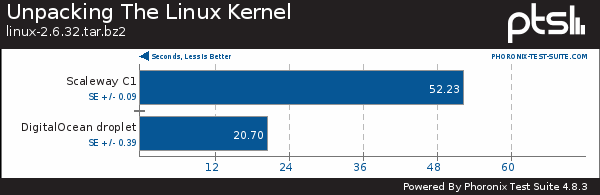

Without further ado, let’s get to the results. A good indicator of real-world CPU performance is data compression. This is how quickly both machines managed to gzip a 2 GB file and unpack the Linux kernel sources from a .tar.bz2 archive, respectively:

DigitalOcean takes the clear lead in both categories, with the Intel box accomplishing the compression in less than a third of Scaleway’s time and the decompression in less than half. It is important to remember, though, that this only measures single-core performance (neither gzip nor bzip2 is able to take advantage of multiple CPUs). An interesting benchmark would be to see how well xz-utils performs on Scaleway, as its recent versions support multithreaded operation.
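To illustrate how extra cores could help a compression workload, here is a minimal Python sketch that splits the input into chunks and compresses them concurrently. It is not how gzip, bzip2, or xz work internally, and it was not part of the benchmarks above. The chunk size and worker count are arbitrary assumptions, and the sketch relies on CPython’s `zlib` module releasing the GIL while it compresses, so that threads can run on separate cores.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

CHUNK_SIZE = 1 << 20  # 1 MiB per chunk; an arbitrary choice for illustration


def split_chunks(data: bytes, size: int = CHUNK_SIZE) -> list[bytes]:
    """Cut the input into fixed-size pieces (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def compress_parallel(data: bytes, workers: int = 4) -> list[bytes]:
    """Compress each chunk independently so several cores can work at once."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so chunks can be rejoined later.
        return list(pool.map(zlib.compress, split_chunks(data)))


def decompress_chunks(chunks: list[bytes]) -> bytes:
    """Reverse compress_parallel by decompressing and concatenating in order."""
    return b"".join(zlib.decompress(chunk) for chunk in chunks)
```

Because every chunk is compressed on its own, the result is a list of independent streams rather than a single gzip file, and the compression ratio is typically a little worse than compressing the whole input at once.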

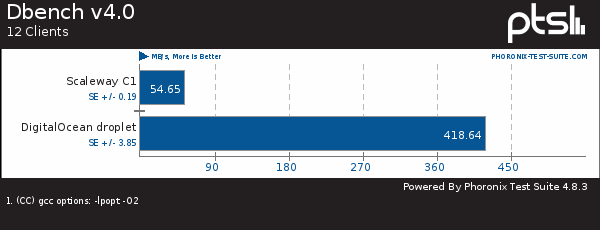

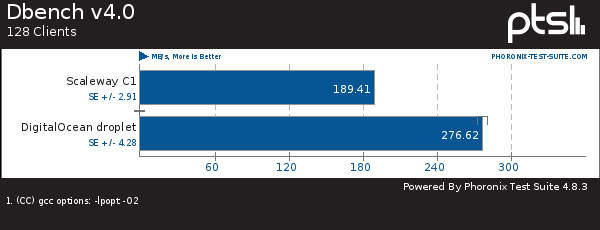

Turning our attention to I/O-bound tasks, we notice that things are not looking good for Scaleway on this front, either. Benchmarks range wildly here, from Scaleway’s performance being “only somewhat worse than DigitalOcean” to “much worse” to “catastrophic”. Here’s Samba’s DBench suite performing an arbitrary but diverse sequence of I/O operations with 12 and 128 clients, respectively:

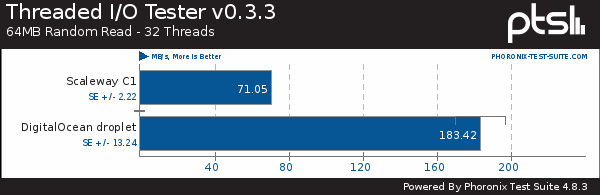

Things are happening in multiple processes here, so increased concurrency helps a lot. But here’s what the Threaded I/O Tester has to say on its random read benchmark:

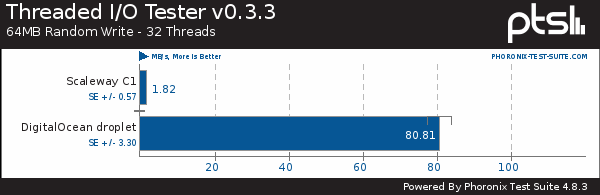

Or, $deity forbid, random write:

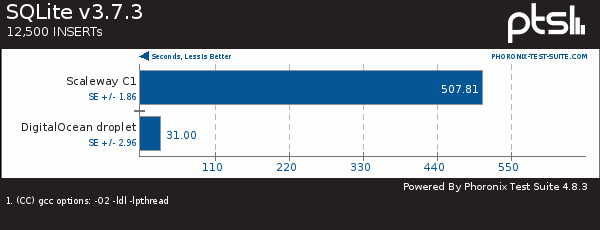

This last result seems particularly devastating. A plausible hypothesis is that disk I/O patterns involving a lot of fseek()s are inherently sensitive to latency, and this is much higher in case of Scaleway due to the LSSD/SSD distinction and the physical distance between the storage units and the CPU. Clearly, this merits more thorough analysis than is in scope of this post. However, results of the SQLite benchmark with a sequence of INSERTs seem to corroborate this:

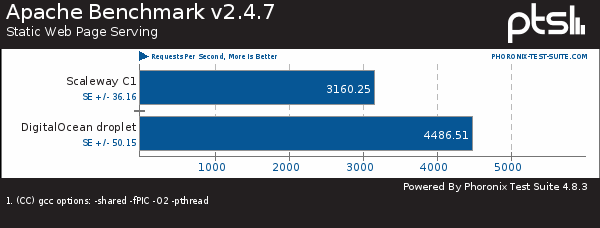

Finally, let’s have a look at another all-time favourite, the performance of a Web server. Apache is only around 30% slower in a static-page scenario, serving 3160 req/s on Scaleway as opposed to just under 4.5Kreq/s on DigitalOcean:

To conclude this section, I’d like to point out that even though the benchmarks show the C1’s performance to be much lower than DigitalOcean’s, the former is actually much more consistent and predictable (note the small standard deviations on all charts). This is likely an effect of Scaleway offering physical machines of which you are the sole user, as opposed to DigitalOcean’s virtualized servers.
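One way to put a number on “consistent and predictable” is the coefficient of variation, i.e. the standard deviation divided by the mean. The sketch below is only an illustration: the sample figures are made up and are not taken from the Phoronix output.

```python
from statistics import mean, stdev


def coefficient_of_variation(samples: list[float]) -> float:
    """Sample standard deviation divided by the mean; lower means steadier."""
    return stdev(samples) / mean(samples)


# Made-up req/s figures for illustration only, NOT measurements from this post.
steady_but_slow = [3150.0, 3160.0, 3170.0]
fast_but_noisy = [3900.0, 4500.0, 5100.0]

print(f"steady: {coefficient_of_variation(steady_but_slow):.4f}")
print(f"noisy:  {coefficient_of_variation(fast_but_noisy):.4f}")
```

A machine can lose on the mean and still win on this metric, which is the pattern the charts suggest for the C1.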

Benchmarking Rails

So far, so good. Or, should I say, so far, so bad (for Scaleway), as the results aren’t looking optimistic for that platform. But we’re here for Rails, aren’t we? Phoronix doesn’t help much in the area of assessing Rails performance, so I installed one of our in-house Rails apps on both boxes, running on Unicorn in each case. The app was very simple, with just a handful of views in it.

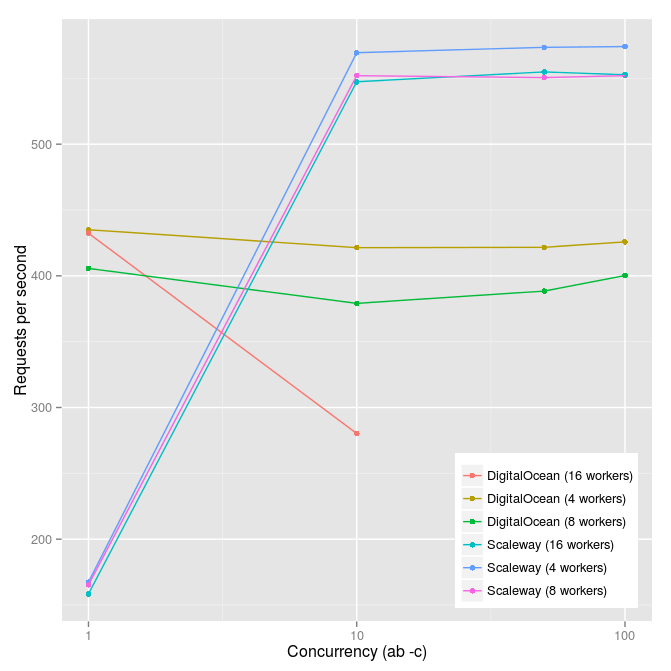

I then ran ab against the app in various configurations, altering the number of concurrent requests to the Rails app (ab’s -c option) and the number of Unicorn worker processes. As was expected, in the low-concurrency setting (one request at a time) Scaleway’s performance was vastly inferior to that of DigitalOcean. But at higher concurrency settings, Scaleway actually ended up outperforming DO:

The number of workers didn’t seem to matter much on Scaleway, with 4 (equal to the number of CPU cores) giving slightly better results than other configurations. Interestingly, the application bombed out with a SIGSEGV on DigitalOcean, but not on Scaleway, when run on 16 workers and undergoing a heavy workload with 50+ concurrent requests.
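The test matrix described above (ab concurrency crossed with Unicorn worker counts) could be generated along these lines. This is a sketch, not the script actually used: the URL, the request count, and the specific concurrency and worker values are assumptions, since the post does not list them. Only ab’s `-n` (total requests) and `-c` (concurrency) options are used.

```python
from itertools import product

# Hypothetical values; the post does not list the exact settings used.
CONCURRENCY_LEVELS = [1, 10, 50]
WORKER_COUNTS = [2, 4, 16]


def ab_command(url: str, concurrency: int, total_requests: int) -> list[str]:
    """Build an ApacheBench invocation: -n is total requests, -c concurrency."""
    return ["ab", "-n", str(total_requests), "-c", str(concurrency), url]


def build_matrix(url: str, total_requests: int = 1000):
    """Yield (workers, command) pairs for every combination in the matrix.

    Unicorn has to be restarted with the given worker count before the
    matching command is run; this sketch only builds the commands.
    """
    for workers, concurrency in product(WORKER_COUNTS, CONCURRENCY_LEVELS):
        yield workers, ab_command(url, concurrency, total_requests)


for workers, command in build_matrix("http://localhost:8080/"):
    print(f"{workers} workers: {' '.join(command)}")
```

Each command list can be handed to `subprocess.run` once the app is up with the corresponding number of workers.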

A look at the JVM

Just for fun, I decided to check how well the C1 plays with the JVM and its languages (specifically, Clojure). I don’t have any benchmarks here, but the answer turned out to be: “surprisingly well.” After installing Java (Oracle’s Java 8 packages for ARM worked flawlessly), things Just Worked. I installed Leiningen and ran my application without any problems. The only quirk is that the JVM only ran in Client VM mode, meaning a faster startup time (but still longish, on the order of several seconds) at the expense of less aggressive JIT optimizations.

Wrapping up

Time to revisit the question posed in the title: is the Scaleway worth it? The answer is, as usual, “it depends.”

Certainly not for anything mission-critical. The performance you’ll get out of a Scaleway is modest at best, and they don’t offer any other, more capable machines to upgrade to at the moment. Also, Scaleway’s SLA is 99.9%, with customer support available 24x5, so you may experience occasional downtime with nobody to help you out if you’re unlucky enough to hit the weekend.

Based on the I/O benchmarks above, I wouldn’t recommend these boxes for database servers that are expected to deliver acceptable performance for anything medium-sized or above.
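A back-of-the-envelope model shows why latency would hurt a database so much, if the latency hypothesis from the I/O section holds. When every small write has to complete before the next one starts, throughput is capped at block size divided by per-operation latency. The latencies below are hypothetical round numbers, not measurements of either provider.

```python
def sync_io_throughput(block_size_bytes: int, latency_seconds: float) -> float:
    """Upper bound in bytes/s when each request waits for the previous one."""
    return block_size_bytes / latency_seconds


BLOCK_SIZE = 4096  # a common block size, assumed here for illustration

# Hypothetical per-operation latencies, NOT measured values.
directly_attached = sync_io_throughput(BLOCK_SIZE, 0.0001)  # 100 microseconds
over_the_network = sync_io_throughput(BLOCK_SIZE, 0.001)    # 1 millisecond

print(f"directly attached: {directly_attached / 1e6:.1f} MB/s")
print(f"over the network:  {over_the_network / 1e6:.1f} MB/s")
```

In this model a tenfold increase in latency means a tenfold drop in throughput for serialized small operations, no matter how fast the drive itself is.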

However, for personal projects, simple Rails applications, spare backup space (the default 50 GB comes in handy here), or just for the fun of hacking on ARM, I would say: go for it!